Manifested AI Needs More Than Vision. What Is Touch Emotion Recognition?

Whatever you call it (manifested AI, physical AI, or embodied AI), it is the next big shift in AI research and industry. Elon Musk, Mark Zuckerberg, Jensen Huang, and many other big players in AI are already betting billions on this vision. Jeff Brown even claims manifested AI could ignite a $25 trillion humanoid robotics boom.

So what does Silicon Valley’s latest buzzword, “manifested AI,” actually mean?

It is AI that comes out from behind the screen and manifests in the real world: AI with a body, able to move through space and to interact with physical objects and with us in our shared environment.

We’re already seeing early signs of this shift. Tesla is set to produce 5,000 units of its Optimus humanoid robot by 2025. Amazon is filling warehouses with AI-powered autonomous robots while also rolling out home companions like Astro. Meta is betting on mixed reality devices that merge AI with embodied avatars. Nvidia is building the simulation infrastructure (digital twins, Omniverse) to train physical AI at scale.

Manifested AI moves beyond smarter chatbots or better video generation — it’s about physical AI systems that work and live beside us, capable of perceiving, acting, and responding in the unpredictable real world.

The Missing Sense of Touch

Hardware is obviously an essential part of manifested AI. Robots need mechanical parts, sensors and actuators. On the software side, enhanced vision-based neural networks are critical. AI needs to see and understand real-world environments in order to navigate and interact safely and effectively.

But vision isn’t enough. For humans, touch is fundamental not only for interacting with objects (feeling texture, weight, shape) but also for interacting with each other and even with animals. Touch carries emotional meaning. A pat, a squeeze, or a hug all communicate feelings in ways words often cannot.

If we are going to share our physical spaces and build human-like relationships with AI systems, then these systems need to learn the emotional implications of touch. They need to know when a gesture is affectionate, when it signals comfort, or when it indicates frustration or anger. Without the ability to understand the emotional context of touch, manifested AI will always fall short of meaningful social interaction, confined to the role of cold, unempathetic machines.

Touch Emotion Recognition (TER)

This is where my latest research comes in. Last week, after a year-long review process, my paper “Emotion Recognition Using Affective Touch: A Survey” was finally published in IEEE Transactions on Affective Computing.

It is a comprehensive survey of what I term Touch Emotion Recognition (TER) — an emerging field in affective computing focused on interpreting human emotions through touch interactions.

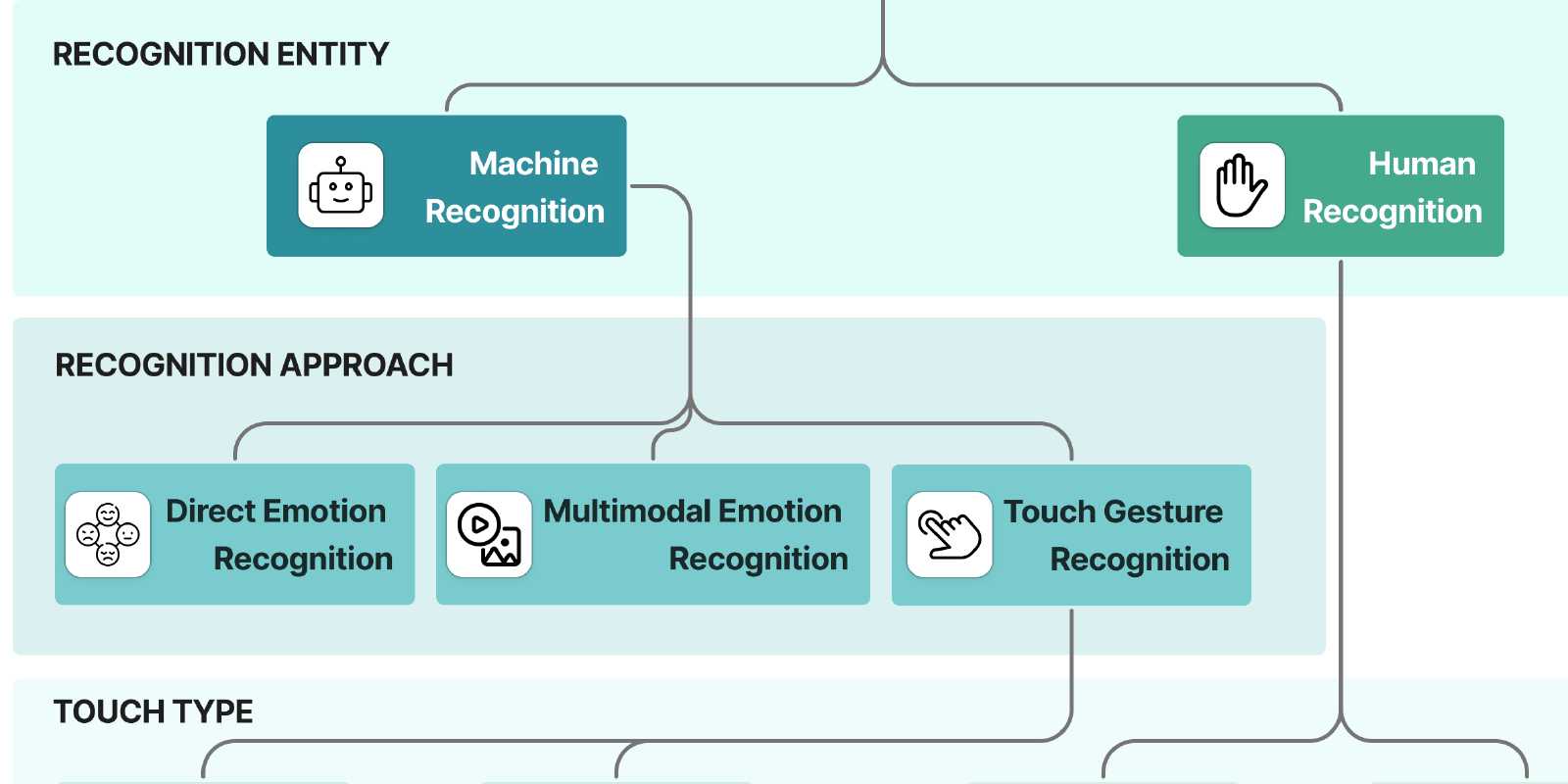

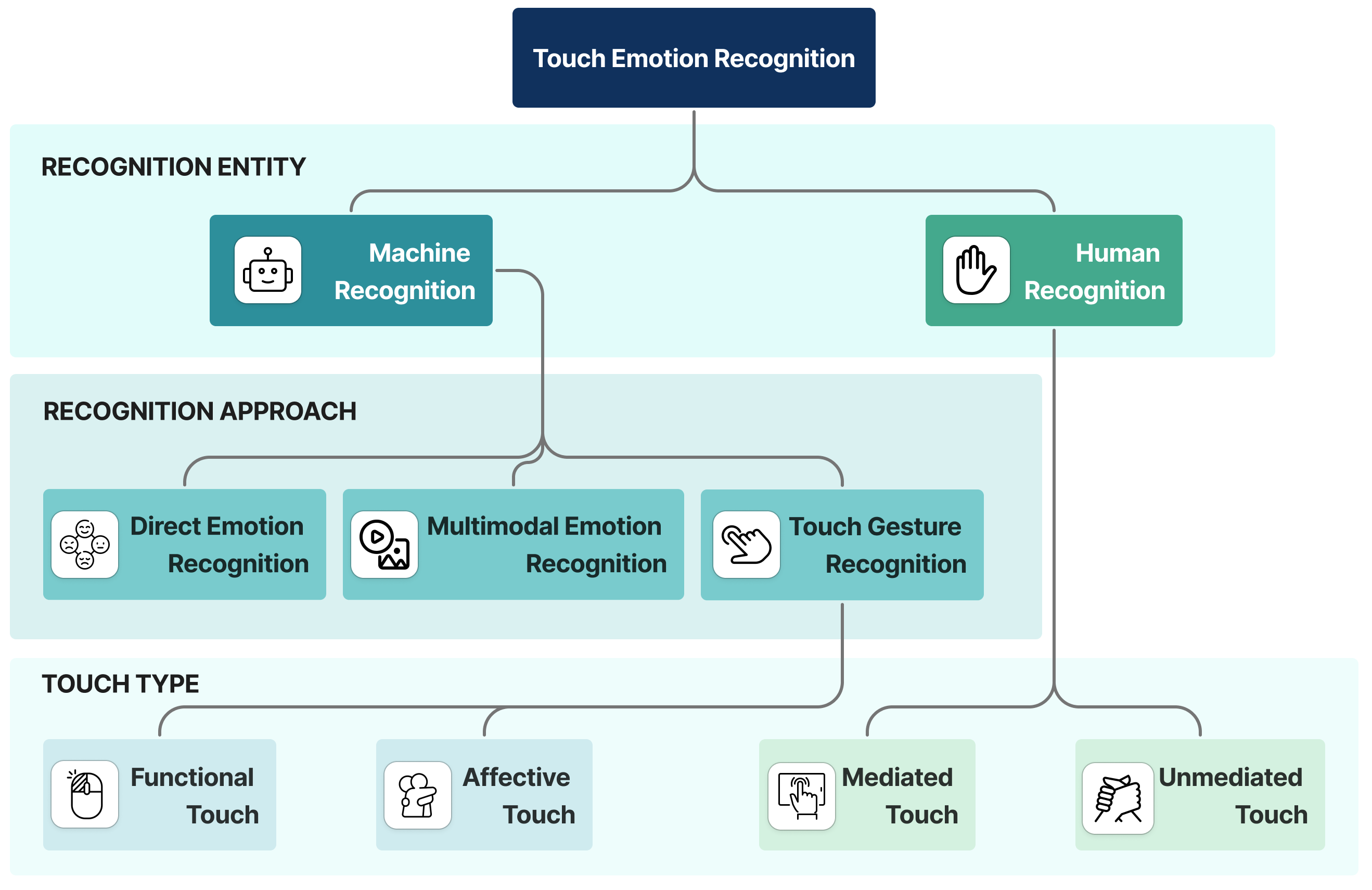

A proposed taxonomy for Touch Emotion Recognition (TER) that categorizes studies based on three key dimensions: recognition entity, recognition approach and type of touch gestures.

The paper reviews the current state of TER by:

- Proposing a new taxonomy of TER research, based on recognition entities, recognition approaches, and touch gesture types.

- Summarizing available datasets of affective touch gestures, and pointing out the scarcity of high-quality, public resources.

- Reviewing hardware technologies — from robotic skins with pressure sensors to fabric-based touch interfaces.

- Outlining the methods used to process and classify touch data.

- Discussing applications in emotional AI, social robotics, VR/AR, and healthcare.

- Identifying the main challenges: limited datasets, lack of standardized devices, and cultural variability in how touch is used and interpreted.

Why It Matters

Manifested AI is more than hype. We are moving toward a world where AI systems are embodied. We will see more applications of manifested AI and social robots in our homes, classrooms, workplaces, hospitals, and public facilities. Vision and speech capabilities are progressing quickly, but without understanding touch, these systems will miss a central part of human communication.

TER is still a very young and underexplored field, but it is essential if we want AI to interact with humans appropriately in social contexts.